A significant number of blog posts have been devoted to the subject of Big Data Analytics, so it is a good time to return to what Advanced Analytics delivers to the business.

I view Advanced Analytics as the use of data and models to provide insights that guide decisions. Advanced Analytics is therefore about data analysis, and for it to be useful, quality data from trusted sources is critical. This is the key difference from Big Data analytics, which focuses on large volumes of data in varied formats, accumulating at high velocity.

Advanced Analytics requires structured data. Where there is structured data, Enterprise Content Management would help, but where there is no structure in the data, the analysis will be fundamentally flawed. The saying "garbage in, garbage out" holds true for Advanced Analytics.

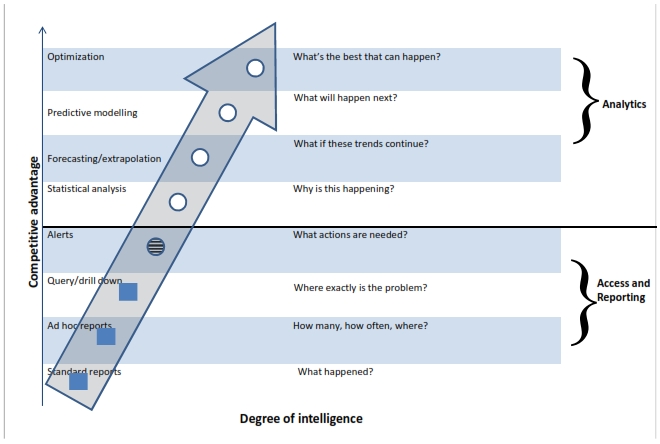

Advanced Analytics begins with the analysis of data. Once analysed, the data is presented in executive dashboards with advanced visualisation capabilities. There are four noteworthy terms in the definition of Advanced Analytics: data, models, insights and decisions.

With Advanced Analytics, mathematical algorithms are used to evaluate data in a context relevant to the user. Complex mathematical models discover patterns in the data that would previously have remained unknown.

Models are the complex mathematical formulae used to turn the available data into knowledge and insights. The purpose of the models is to uncover insights that drive better decision making.
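To make the chain from data to models to insights to decisions concrete, here is a minimal sketch in Python. It is only an illustration: the spend and sales figures are invented, the least-squares line stands in for the far more complex models used in practice, and the names `fit_line` and `recommend` and the threshold value are hypothetical.

```python
from statistics import mean


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar


def recommend(slope, threshold=1.0):
    """Hypothetical decision rule: invest more only if each unit of spend
    is associated with more than `threshold` units of sales."""
    return "increase spend" if slope > threshold else "hold spend"


# Data: invented monthly marketing spend and sales (both in thousands).
spend = [10, 20, 30, 40, 50]
sales = [25, 45, 65, 85, 105]

# Model: fit a straight line to the data.
slope, intercept = fit_line(spend, sales)

# Insight: how sales move with spend, according to the fitted line.
print(f"Each extra unit of spend is associated with {slope:.1f} units of sales")

# Decision: apply the (assumed) business rule to the insight.
decision = recommend(slope)
print(decision)
```

The same caveat about data quality applies here: if the spend or sales figures were unreliable, the fitted slope, and any decision based on it, would be unreliable too.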

I have been engaged in conversations with business leaders, demonstrating how PureApplication System can be used to accelerate the delivery of Advanced Analytics capabilities. Using patterns of expertise, we are able to significantly reduce time to value, enabling line-of-business executives to benefit from innovative IT capabilities in less than a couple of months, where it would previously have taken years to implement.